All the parts of your computer work together to do what you ask of them without much delay or struggle. To do that, each part has to connect and communicate with the others in multiple ways.

Sometimes the connection doesn’t work out the first time you hook things up; other times, a part will inexplicably become disconnected. If the rest of your build doesn’t detect your graphics card, you’ll have to troubleshoot to make the system work together in harmony again.

Why is My GPU Not Detected?

Until you’ve ruled out some causes through troubleshooting, it’s difficult to say precisely why a GPU isn’t being detected. However, a few common causes are worth considering. If one of them sounds familiar, it can give you a head start by showing you which solutions to focus on first.

- Your GPU is damaged or broken. Sometimes a part can be so damaged that it simply doesn’t turn on and function anymore. You can’t always tell whether the GPU is working with a quick visual inspection. Sometimes it may look just fine but be completely inoperable.

- There’s a driver problem. Sometimes a driver update introduces a flaw that prevents the device from working. Other times, an update fixes a problem you’re already experiencing. The only way to know for sure is to do a little investigation into your drivers.

- The graphics card was manually disabled. Windows lets you disable individual devices, and a disabled device stays off until you manually enable it again.

- The card isn’t connected correctly. Sometimes the first installation doesn’t take and must be redone. Anything from a loose cable to the card touching the wrong part of the case can stop it from being detected.

Some of the fixes below only work if you can boot into Windows on the computer with the GPU. If you don’t have integrated graphics on your motherboard, your screen might not turn on at all when the GPU isn’t working. In that case, focus on the solutions that don’t require Windows.

What’s the Best Way to Troubleshoot My GPU?

If it’s a new GPU or a new build, consider taking it apart and reconnecting everything. If you’ve been using the computer for a while and the GPU suddenly stopped working, try the solutions within Windows before opening your case.

Remember that computer components can become worn, dirty, or damaged over time. Keep an eye on your GPU and make time to blow it free of dust occasionally. Doing so will not only help it run cooler but will also help you avoid problems like this.

How to Make My Computer Detect My GPU

To make your computer detect your GPU, ensure everything is connected correctly, running, and clean. Once those issues are taken care of, and you’re sure the GPU works, you can start looking for other solutions.

As always, read the error message you’re getting before you start. Sometimes it will tell you directly what the issue is. Other times, users realize that the GPU isn’t being detected only when greeted with a black screen on their monitors.

Check Your Hookups

Check the hookups from your graphics card to the power source and motherboard before doing anything else. These need to be plugged in firmly. They also need to be in the correct ports.

If you aren’t certain your GPU is connected correctly, check the manual, paying special attention to the power requirements and connection diagrams. Unplug each connector and plug it back in firmly. Remember that some GPUs need dedicated power cables from the power supply; make sure yours is getting enough power to function.
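You can put rough numbers on "enough power." The PCIe slot and each auxiliary power cable can only deliver so much, and their combined capacity has to cover the card's rated board power. The Python sketch below assumes the nominal PCI Express limits: 75 W from the slot, 75 W per 6-pin connector, and 150 W per 8-pin connector. The 320 W card in the example is hypothetical, so substitute the figures from your own card's manual.

```python
# Nominal maximum power (in watts) each source can deliver to a graphics card,
# per the PCI Express specifications. Newer 16-pin connectors are not modeled here.
SLOT_WATTS = 75
CONNECTOR_WATTS = {"6-pin": 75, "8-pin": 150}


def available_power(plugged_in: list[str]) -> int:
    """Watts the slot plus the auxiliary connectors actually plugged in can supply."""
    total = SLOT_WATTS
    for connector in plugged_in:
        if connector not in CONNECTOR_WATTS:
            raise ValueError(f"unknown connector type: {connector!r}")
        total += CONNECTOR_WATTS[connector]
    return total


def has_enough_power(board_power_watts: int, plugged_in: list[str]) -> bool:
    """True if the card's rated board power fits within the available supply."""
    return board_power_watts <= available_power(plugged_in)


if __name__ == "__main__":
    # Hypothetical 320 W card with two 8-pin sockets.
    print(available_power(["8-pin", "8-pin"]))        # 375
    print(has_enough_power(320, ["8-pin", "8-pin"]))  # True
    print(has_enough_power(320, ["8-pin"]))           # False: one cable was left unplugged
```

In practice, connect every power socket on the card. The arithmetic only shows why one missing cable can leave a card without enough power to start and be detected.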

You don’t have to close up your tower before testing to see whether the GPU is detected once you’re done with the hookups. Leave it open in case you need to reseat it.

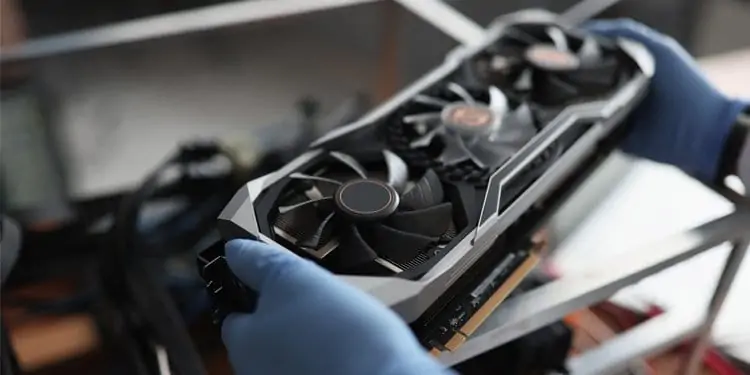

Reseat the GPU

Sometimes the way the GPU is placed in the computer can create problems with the connection. By taking it out and carefully putting it back in, you may be able to fix issues that arise.

Factors like weight and heat can shift a GPU’s position over time. These changes can cause serious issues, including breaking the connection between the GPU and the motherboard. Taking the card out, removing any dust or dirt, and seating it firmly again can fix these problems and get your computer working again.

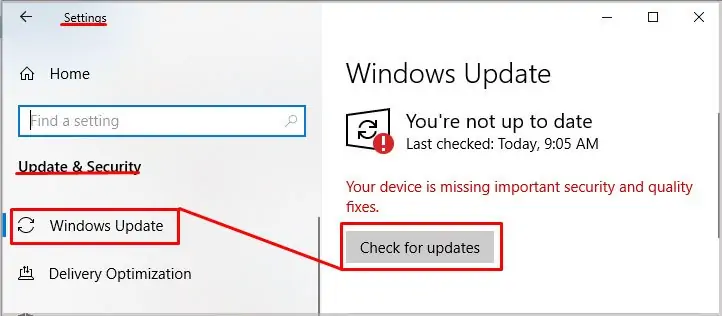

Update Windows

Graphics cards and their drivers support specific versions of Windows, and compatibility with other versions isn’t guaranteed. If that might be the issue, make sure Windows is up to date before troubleshooting anything else.

- Press Windows key + X.

- Click Settings.

- Choose Update & Security (on Windows 11, choose Windows Update).

- Choose Check for Updates.

- Follow the steps to finish.

There’s no guarantee that an update will be available. If one is, you’ll likely have to restart your computer to complete the installation.

Once Windows is updated, try using the graphics card again. If the error appeared in a specific program, open that program. Do whatever triggered the problem before to see whether it’s fixed now.

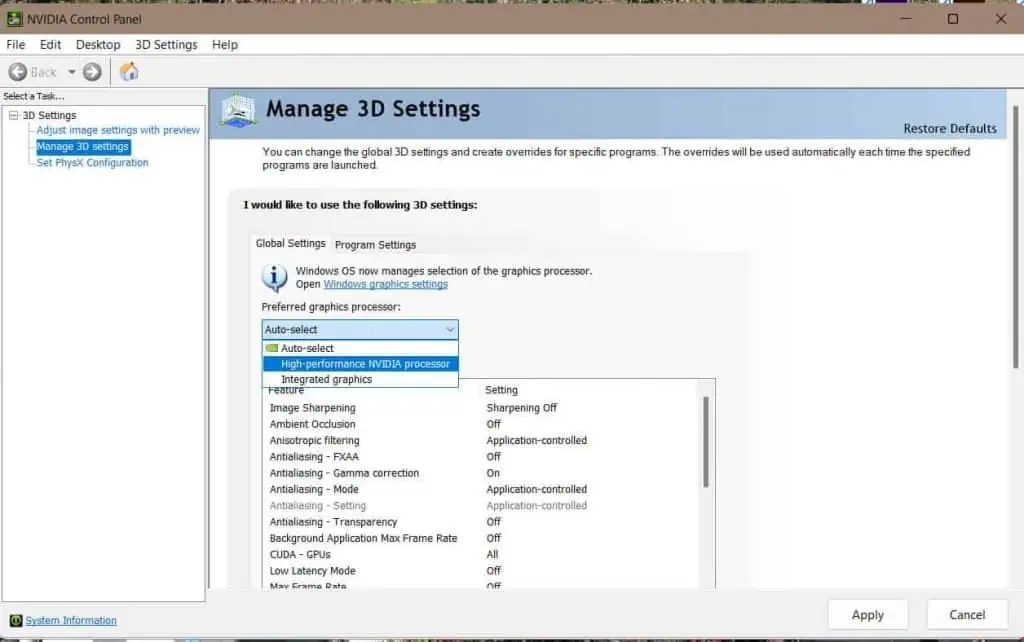

Check Which Graphics Card Is Selected

If your motherboard has integrated graphics, your dedicated card might not be the one selected. People who have both types of graphics available often switch between them. For example, if you have an NVIDIA GPU and integrated graphics, you can choose which one is active in the NVIDIA Control Panel.

- Right-click on your desktop and choose NVIDIA Control Panel.

- Choose Manage 3D Settings in the left pane.

- Open the drop-down menu next to Preferred Graphics Processor.

- Choose your graphics card.

Your graphics card should now be active and detected. If it doesn’t appear as an option, your motherboard likely isn’t detecting it at all. In that case, the problem is probably the connection between the two or a fault in one of them.

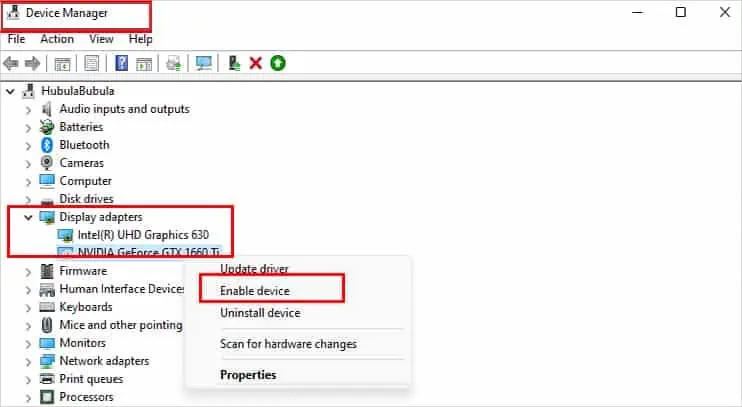

Enable the Graphics Card

Because Windows lets you disable hardware, a component that isn’t working may simply be disabled. Disabled devices don’t function until you enable them again.

- Press Windows key + X.

- Choose Device Manager.

- Expand the list by clicking the arrow next to the computer’s name.

- Expand the Display Adapters category.

- Right-click the GPU if it appears here.

- Choose Enable device.

- Restart your computer.

Once the computer is back on, the graphics card should work. If the card is already enabled, the menu shows Disable device instead. In that case, leave it alone and close Device Manager.

If the GPU isn’t showing up in Device Manager, the computer doesn’t detect it at all. As far as Windows is concerned, the card doesn’t exist, so you’re unlikely to be able to troubleshoot it from within Windows. That doesn’t guarantee a hardware problem, but it makes one more likely.
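You can also get a command-line view of what Device Manager shows. Windows exposes display adapters through the Win32_VideoController CIM class, whose ConfigManagerErrorCode is 0 when a device is working and 22 when it has been disabled. The Python sketch below assumes Windows PowerShell is on the PATH and that the query shown returns CSV. If a card is missing from the output entirely, Windows isn't detecting it, and codes other than 0 and 22 point to some other driver or hardware problem.

```python
import csv
import io
import shutil
import subprocess

# Device Manager status codes reported in ConfigManagerErrorCode.
WORKING = 0
DISABLED = 22

POWERSHELL_QUERY = (
    "Get-CimInstance Win32_VideoController | "
    "Select-Object Name, ConfigManagerErrorCode | "
    "ConvertTo-Csv -NoTypeInformation"
)


def parse_adapters(csv_text: str) -> list[tuple[str, int]]:
    """Turn the CSV produced by POWERSHELL_QUERY into (name, status_code) pairs."""
    adapters = []
    for row in csv.DictReader(io.StringIO(csv_text.strip())):
        code = row["ConfigManagerErrorCode"]
        # An empty code means Windows reported no status; use -1 for "unknown".
        adapters.append((row["Name"], int(code) if code else -1))
    return adapters


def describe(name: str, code: int) -> str:
    """Human-readable summary of one adapter's status."""
    if code == WORKING:
        return f"{name}: working"
    if code == DISABLED:
        return f"{name}: disabled (enable it in Device Manager)"
    if code == -1:
        return f"{name}: status unknown"
    return f"{name}: problem code {code}"


if __name__ == "__main__":
    powershell = shutil.which("powershell")
    if powershell is None:
        print("PowerShell not found; run this on the Windows PC you are troubleshooting.")
    else:
        result = subprocess.run(
            [powershell, "-NoProfile", "-Command", POWERSHELL_QUERY],
            capture_output=True, text=True, check=True,
        )
        for name, code in parse_adapters(result.stdout):
            print(describe(name, code))
```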

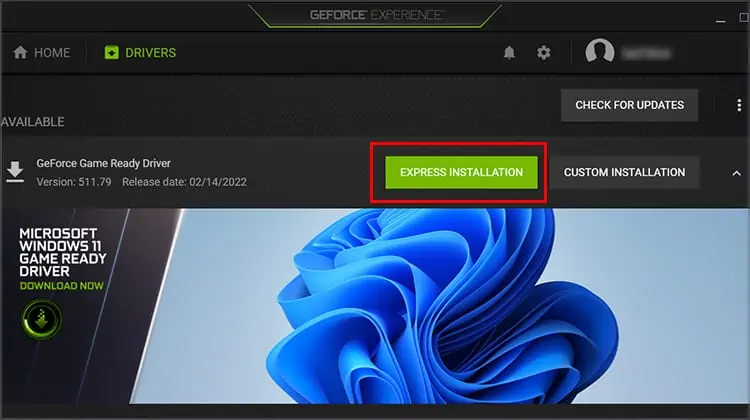

Update the Drivers

Drivers are the software that lets your operating system communicate with each component. Every piece of hardware in your computer has its own drivers, and the update process for graphics cards differs a little from the rest.

While you can update them in Device Manager, the better way is usually to use the GPU management software that came with your card or that you can download for free.

For example, GeForce users can open GeForce Experience to check for GPU driver updates.

- Open GeForce Experience.

- Click the Drivers tab.

- Click Download if a driver update appears. If it says you’re up to date, you can close the program.

- Wait for the program to download the update. Depending on your connection speed and the update’s size, this may take a while, though most downloads are quick.

- Choose Express Installation. You could choose Custom Installation if you prefer, but the express option is quicker and more suitable for most people.

- Click Yes if prompted to confirm.

- Wait for the process to complete and restart your computer.

Sometimes your monitor may flash or go black during a GPU driver upgrade. Don’t worry! It’s completely normal and part of the process. Just wait until it’s done before doing anything else on your computer.
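For NVIDIA cards, the nvidia-smi command-line tool that ships with the driver offers a quick check. If it lists your card, the driver can see it, and it reports the installed driver version so you can compare it with the one GeForce Experience offers. This Python sketch assumes nvidia-smi is on your PATH, and the version numbers in the checks are hypothetical.

```python
import shutil
import subprocess

QUERY_ARGS = ["--query-gpu=name,driver_version", "--format=csv,noheader"]


def parse_gpus(output: str) -> list[tuple[str, str]]:
    """Split nvidia-smi CSV lines such as 'NVIDIA GeForce RTX 3080, 546.33'."""
    gpus = []
    for line in output.strip().splitlines():
        name, version = line.rsplit(",", 1)
        gpus.append((name.strip(), version.strip()))
    return gpus


def is_older(installed: str, offered: str) -> bool:
    """Compare dotted driver versions numerically, so '99.1' sorts before '546.33'."""
    def as_tuple(version: str) -> tuple[int, ...]:
        return tuple(int(part) for part in version.split("."))
    return as_tuple(installed) < as_tuple(offered)


if __name__ == "__main__":
    smi = shutil.which("nvidia-smi")
    if smi is None:
        print("nvidia-smi not found: the NVIDIA driver is missing or not on PATH.")
    else:
        result = subprocess.run([smi, *QUERY_ARGS], capture_output=True, text=True)
        if result.returncode != 0:
            # nvidia-smi typically exits with an error when it can't reach a GPU through the driver.
            print("nvidia-smi could not talk to a GPU:", result.stdout.strip() or result.stderr.strip())
        else:
            for name, version in parse_gpus(result.stdout):
                print(f"{name}: driver {version}")
```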

Try a Different Slot

If your motherboard has another available PCIe slot, try installing your graphics card in that slot instead of the original one. These slots can fail individually, so one might work while another is broken.

If your GPU isn’t detected, the slot it’s installed in might have failed. Move the card to the other slot and boot up your PC again. If the card is detected there, the original PCIe slot is likely broken, and you’ll have to get it repaired or switch to a different motherboard.

Check for Conflicting Programs

Any program that changes your GPU settings can be an issue preventing your computer from recognizing the card.

Some users found that their GPUs didn’t show up when the iGPU setting was active in ASUS Armoury Crate. To fix it, they had to switch the setting on, restart, switch it off, restart again, and then update the drivers for the display adapters listed in Device Manager.

This isn’t the only program that might create issues for users. Check for any programs that change the display properties and disable them or adjust your settings to see whether your GPU is finally detected.

Clean the GPU and Manage the Thermals

Long periods of heavy use and heat can damage a GPU. If your GPU isn’t being detected, it might need some maintenance.

If it isn’t under warranty and you’re comfortable doing so, consider taking it out and cleaning it to make sure nothing obstructs the fans. You can use compressed air to blow away any dust. Then spin each fan gently with your finger to make sure it turns freely, since the fans need to move to keep the card cool.

If it’s an older card, consider taking it apart and replacing the thermal paste or pads. When you reassemble it, tighten the screws firmly but not so hard that the card bends. After the new thermal paste and pads are applied, the card must be held together firmly.

Reach Out for Help

If you’ve done all of these things and your GPU still isn’t working, you may have a dead card. If it’s under warranty, reach out to the company you purchased it from and discuss options with them. They may have some specific troubleshooting tips to help you get your GPU to work.

Frequently Asked Questions

Why Is My GPU Not Being Detected by My Monitor?

If your monitor isn’t seeing your GPU, it might be that your cable isn’t working. Sometimes an HDMI cable stops sending the signal, and then you don’t see any input from your computer. It could also be that you’ve selected the wrong input.

If you get a picture from the motherboard’s integrated graphics but nothing from the GPU, try another port on the GPU. If a different port works, either your cable or the original GPU port is broken.

Why Won’t My GPU Show Up in Task Manager?

If your graphics driver uses an old version of the Windows Display Driver Model (WDDM), your GPU won’t appear in Task Manager. Task Manager needs WDDM 2.0 or later to show GPU information, and WDDM 2.0 requires Windows 10 or later. Check whether your graphics card and system support it before attempting to upgrade your driver.
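The DirectX Diagnostic Tool can tell you which WDDM version your driver uses. Running `dxdiag /t report.txt` saves a text report, and its display section includes a line such as "Driver Model: WDDM 2.7". The Python sketch below parses that report. The script and report file names are arbitrary, and the line format it matches is an assumption based on typical dxdiag output.

```python
import re
import sys

# Matches lines such as "Driver Model: WDDM 2.7" in a dxdiag text report.
DRIVER_MODEL = re.compile(r"Driver Model:\s*WDDM\s+(\d+)\.(\d+)")


def wddm_versions(report_text: str) -> list[tuple[int, int]]:
    """Return every (major, minor) WDDM version listed in the report."""
    return [(int(major), int(minor)) for major, minor in DRIVER_MODEL.findall(report_text)]


def task_manager_can_show_gpu(version: tuple[int, int]) -> bool:
    """Task Manager's GPU information needs WDDM 2.0 or newer."""
    return version >= (2, 0)


def read_report(path: str) -> str:
    """Read the report, decoding it as UTF-16 if it starts with a UTF-16 byte-order mark."""
    with open(path, "rb") as report:
        data = report.read()
    if data.startswith((b"\xff\xfe", b"\xfe\xff")):
        return data.decode("utf-16")
    return data.decode("utf-8", errors="replace")


if __name__ == "__main__":
    if len(sys.argv) != 2:
        print("usage: python wddm_check.py report.txt")
    else:
        for major, minor in wddm_versions(read_report(sys.argv[1])):
            verdict = "OK" if task_manager_can_show_gpu((major, minor)) else "too old"
            print(f"WDDM {major}.{minor}: {verdict} for Task Manager GPU stats")
```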

How Do I Enable My HDMI Port on My GPU?

You shouldn’t have to enable it, because it should always be active. When you plug an HDMI cable into the back of the GPU, it should work without any other setup.