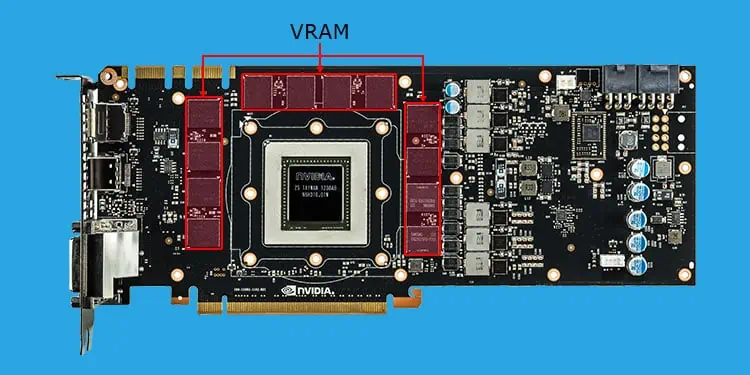

A graphics processor comes with Video Random Access Memory (VRAM), which serves the GPU much as system RAM serves the CPU. Textures, shaders, and other graphics data are loaded from storage into VRAM, where the GPU can access them quickly while rendering what you see on screen.

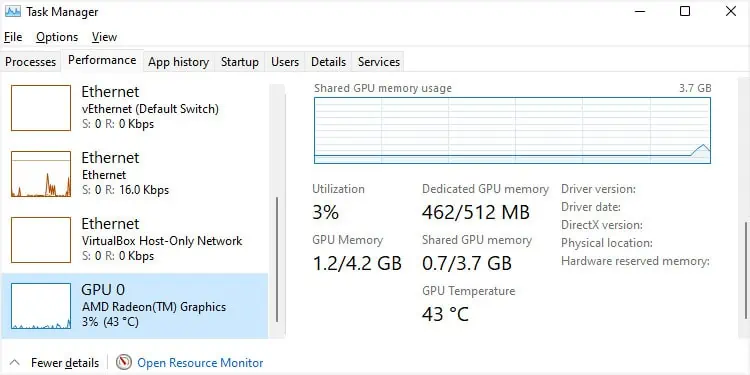

However, VRAM capacity is limited. If you run tasks that need more video memory than the card provides, the GPU runs out of VRAM. In that case, the system lets it borrow part of the system RAM (usually up to half) as virtual video memory. This borrowed memory is the Shared GPU Memory.
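To make the spillover concrete, here is a minimal Python sketch of the idea. It is a simplified model, not how any driver or operating system actually allocates memory: the function name `split_video_memory` is hypothetical, and the 50% ceiling is an assumption based on the half-of-RAM figure mentioned above. Demand is served from VRAM first, any overflow comes from shared memory up to the ceiling, and whatever is left over cannot be satisfied.

```python
# Simplified, hypothetical model of how graphics memory demand spills from
# dedicated VRAM into shared GPU memory. Real drivers manage this dynamically.

def split_video_memory(demand_gb: float, vram_gb: float, system_ram_gb: float,
                       shared_fraction: float = 0.5) -> tuple[float, float, float]:
    """Return (from_vram, from_shared, unmet) in GB for a given demand.

    shared_fraction is an assumed ceiling (half of system RAM by default).
    """
    shared_limit = system_ram_gb * shared_fraction  # a ceiling, not a reservation
    from_vram = min(demand_gb, vram_gb)              # dedicated VRAM is used first
    overflow = demand_gb - from_vram                 # what VRAM could not hold
    from_shared = min(overflow, shared_limit)        # only the overflow is borrowed
    unmet = overflow - from_shared                   # beyond the ceiling: not satisfiable
    return from_vram, from_shared, unmet


if __name__ == "__main__":
    # 8 GB VRAM, 16 GB RAM: a 10 GB workload borrows only 2 GB of shared memory.
    print(split_video_memory(10, 8, 16))  # (8, 2, 0)
    # The workload fits in VRAM, so shared memory is not touched at all.
    print(split_video_memory(6, 8, 16))   # (6, 0, 0)
    # Integrated GPU with no dedicated VRAM: everything comes from shared memory.
    print(split_video_memory(3, 0, 16))   # (0, 3, 0)
```

The design choice to treat the shared limit as a ceiling rather than a fixed reservation reflects the point that the system only takes the RAM it actually needs.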

Why Does GPU Need Dedicated VRAM or Shared GPU Memory?

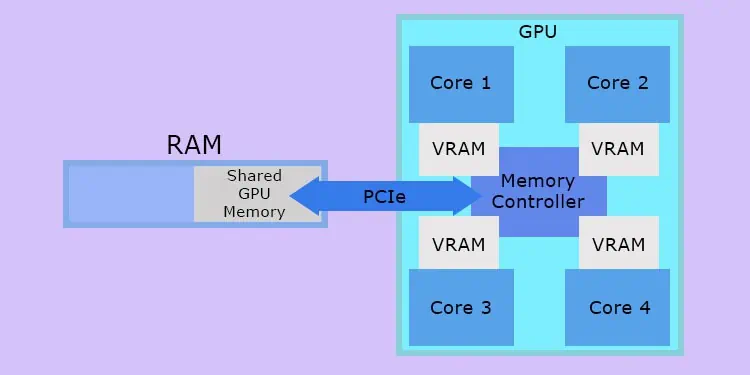

Unlike a CPU, which is built to run a relatively small number of tasks very quickly, a GPU processes many graphics tasks in parallel. A single frame may need multiple textures, shaders, and lighting elements, as well as post-processing effects like filters. Processing all of these quickly enough to render the display smoothly requires many cores working in parallel.

Before the GPU can work on these elements, they have to be read from the storage drive where they are kept. That's where VRAM comes in. The data is loaded from the drive (usually passing through system RAM) into VRAM, which sits close to the GPU and feeds it at very high bandwidth, so the cores aren't left waiting on slower storage or system memory.

If the dedicated VRAM is full, the computer uses part of the system RAM as virtual VRAM instead.

Most integrated GPUs (iGPUs) have no dedicated VRAM or only a small amount of it. So if your computer only has an iGPU, it relies on shared GPU memory for most graphics work.

Without this fallback, graphics-intensive tasks that exceed the available video memory are far more likely to fail or crash.

How is Shared GPU Memory Different from Dedicated Video Memory?

Dedicated VRAM offers much higher bandwidth than system RAM (unless you're using a very old GPU). So shared GPU memory can never perform as well as dedicated VRAM.

Apart from that, on a graphics card the VRAM sits right next to the GPU and is closely linked to its cores, while data in system RAM has to travel over the PCIe connection to reach the GPU. This further reduces the performance of shared GPU memory.

Also, whatever system RAM the GPU uses as shared memory is no longer available to the CPU and your other programs. This can cause further slowdowns, or even become a bottleneck that keeps the CPU or the GPU itself from reaching its full potential.

Should I Configure Shared GPU Memory?

Some devices include an option to configure Shared GPU Memory in their BIOS. However, it is not recommended to change this setting, whether or not you have sufficient dedicated VRAM.

If you have enough VRAM, your system won't use shared GPU memory unless it needs to. Even then, it doesn't reserve the full half of your RAM, only the portion it actually uses. So there's no need to change the setting.

If your graphics processor doesn't have enough VRAM, your computer automatically allocates the necessary space from RAM as Shared GPU Memory. Parts of this space act as virtual VRAM while the GPU is using them and as regular RAM otherwise.

This automatic allocation balances the RAM needs of the system against the VRAM needs of the GPU, so it's best to leave the setting alone. Otherwise, you may run into crashes or lag in graphics-intensive applications.